Contextual Anomaly Detection by Correlated Probability Distributions using Kullback-Leibler Divergence

Contextual Anomaly

Abstract

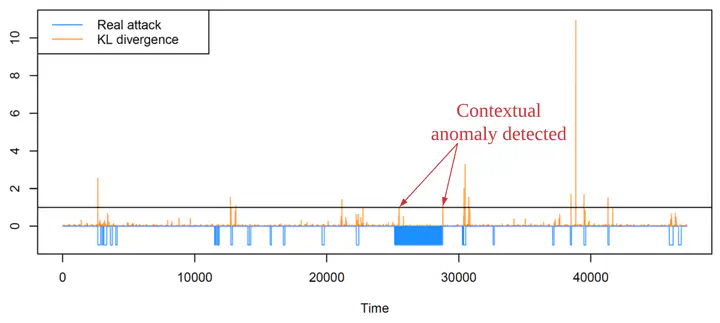

We investigate anomaly detection in Cyber-Physical Systems (CPS), where anomalies are often attacks intended to disrupt the operation of critical infrastructure. We use the Secure Water Treatment (SWaT) dataset, in which normal and attack states are simulated at the water tanks. Among the different types of anomalies, we focus on contextual anomalies, which can be difficult to detect with the Out-Of-Limit threshold method. Recent research has shown promising results in detecting anomalies by analyzing the error distributions of machine learning classifiers. In a similar way, we statistically analyze the prediction error patterns of RNN and MDN classifiers to detect anomalies. First, we generate anomaly scores with the Local Outlier Factor (LOF) and remove point anomalies. We then estimate an empirical probability distribution over a window of fixed size and slide this window across the data to measure the difference between the probability distributions of different windows. To efficiently measure the difference between anomalous and normal data, we use the Kullback-Leibler divergence. Our preliminary results show that this approach detects contextual anomalies more effectively than the Nearest Neighbor Distance (NND) approach.
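The pipeline described in the abstract (LOF scoring, point-anomaly removal, fixed-size windows, and KL divergence between window distributions) can be sketched in plain Python. This is a minimal illustration under several assumptions, not the authors' implementation. The RNN/MDN prediction errors are taken as an already-computed 1-D list. LOF is a simplified 1-D version with index-based tie-breaking and an arbitrary threshold of 1.5. Each window's distribution is a fixed-bin histogram with additive smoothing. Every window is compared against the first window, which is presumed normal. The function names (`lof_scores`, `histogram`, `kl_divergence`, `contextual_scores`, `toy_errors`), window size, bin count, and threshold are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def lof_scores(values: Sequence[float], k: int = 5) -> List[float]:
    """Simplified 1-D Local Outlier Factor (hypothetical helper; ties broken by index)."""
    n = len(values)
    if not 0 < k < n:
        raise ValueError("k must satisfy 0 < k < len(values)")
    # Indices of the k nearest neighbours of each point (excluding the point itself).
    neighbours = []
    for i in range(n):
        ranked = sorted((abs(values[i] - values[j]), j) for j in range(n) if j != i)
        neighbours.append([j for _, j in ranked[:k]])
    # k-distance: distance from each point to its k-th nearest neighbour.
    k_dist = [abs(values[i] - values[neighbours[i][-1]]) for i in range(n)]
    # Local reachability density; the tiny constant avoids division by zero
    # when a point has k exact duplicates.
    lrd = []
    for i in range(n):
        reach = [max(k_dist[j], abs(values[i] - values[j])) for j in neighbours[i]]
        lrd.append(1.0 / (sum(reach) / k + 1e-12))
    # LOF: mean ratio of the neighbours' density to the point's own density.
    return [sum(lrd[j] for j in neighbours[i]) / (k * lrd[i]) for i in range(n)]


def histogram(points: Sequence[float], lo: float, hi: float, bins: int,
              eps: float = 1e-6) -> List[float]:
    """Empirical distribution over fixed bins; additive smoothing keeps every bin > 0."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for x in points:
        idx = int((x - lo) / width) if width > 0 else 0
        counts[min(max(idx, 0), bins - 1)] += 1
    total = len(points) + eps * bins
    return [(c + eps) / total for c in counts]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """D_KL(p || q) = sum_i p_i * log(p_i / q_i); assumes strictly positive entries."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def contextual_scores(errors: Sequence[float], window: int, step: int, k: int = 5,
                      lof_threshold: float = 1.5,
                      bins: int = 10) -> Tuple[List[int], List[float]]:
    """Return (indices flagged as point anomalies, KL score per sliding window)."""
    lof = lof_scores(errors, k)
    keep = [s <= lof_threshold for s in lof]  # assumed threshold, not from the paper
    point_anomalies = [i for i, ok in enumerate(keep) if not ok]
    kept = [e for e, ok in zip(errors, keep) if ok]
    lo, hi = min(kept), max(kept)

    def window_dist(start: int) -> List[float]:
        # Point anomalies are excluded from the histogram but keep their time slot.
        pts = [errors[i] for i in range(start, start + window) if keep[i]]
        return histogram(pts, lo, hi, bins)

    # Assumption: the first window is normal and serves as the reference.
    reference = window_dist(0)
    starts = range(0, len(errors) - window + 1, step)
    return point_anomalies, [kl_divergence(window_dist(s), reference) for s in starts]


def toy_errors() -> List[float]:
    """Hypothetical error series: a repeating pattern, one spike, one in-range shift."""
    errors = [0.1 * (i % 5) for i in range(100)]
    errors[30] = 5.0            # point anomaly: far outside the usual range
    for i in range(60, 80):
        errors[i] = 0.1 * 4     # contextual anomaly: in range, but the pattern changes
    return errors


if __name__ == "__main__":
    points, scores = contextual_scores(toy_errors(), window=20, step=20, bins=5)
    print(points)  # [30]
    print([round(s, 3) for s in scores])
```

On this toy series the spike at index 30 is removed by the LOF step, and the window starting at index 60 receives by far the largest KL score, even though none of its values exceeds the normal range. This is the kind of case an Out-Of-Limit threshold would miss. Comparing against a single reference window is only one possible reading of "between the other windows"; comparing adjacent windows would be an equally plausible variant.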

Cho, Jinwoo, Shahroz Tariq, Sangyup Lee, Young Geun Kim, Jeong-Han Yun, Jonguk Kim, Hyoung Chun Kim, and Simon S. Woo. 2019. “Contextual Anomaly Detection by Correlated Probability Distributions Using Kullback-Leibler Divergence.” Anchorage, Alaska, USA.